How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase

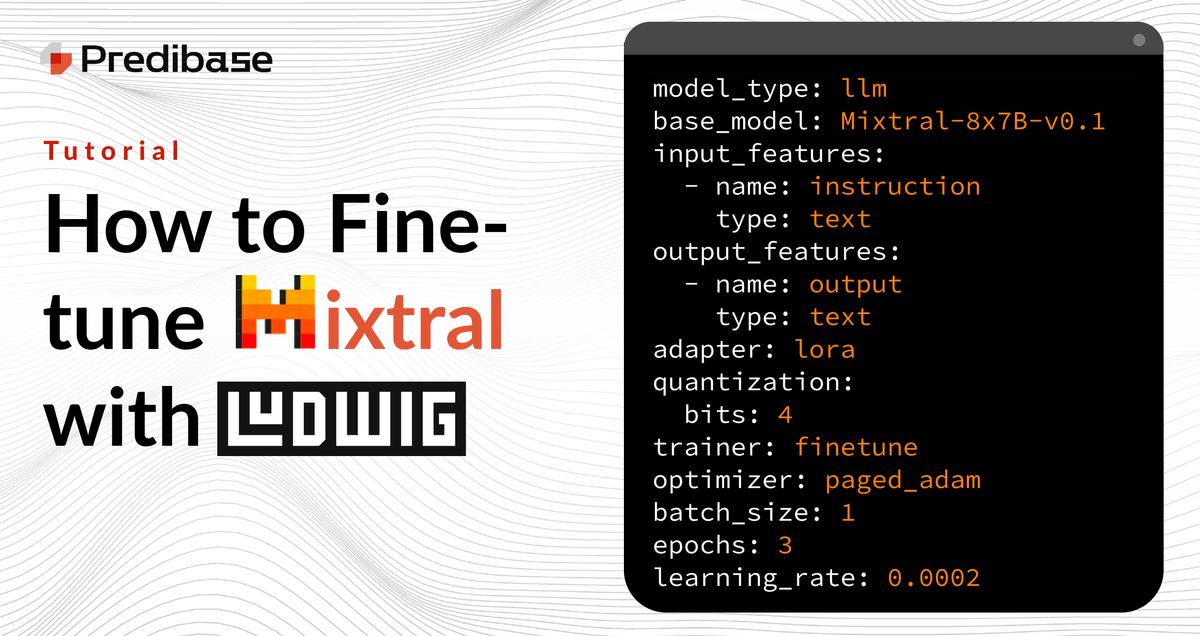

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
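As a rough illustration of what "a few lines of code" can look like, here is a minimal sketch using Ludwig's Python API with 4-bit quantization and a LoRA adapter, which is one common way to fit a large model on limited hardware. It is not the tutorial's own snippet: the column names `instruction` and `output`, the helper names `build_config` and `fine_tune`, and the trainer values are assumptions chosen for illustration, and the dataset path is hypothetical.

```python
import logging


def build_config(base_model: str = "mistralai/Mixtral-8x7B-v0.1") -> dict:
    """Build a Ludwig config for 4-bit LoRA fine-tuning of an LLM.

    The feature names and trainer values below are illustrative assumptions;
    adjust them to match your dataset columns and hardware.
    """
    return {
        "model_type": "llm",
        "base_model": base_model,
        # Load the base model weights in 4-bit precision to reduce memory use.
        "quantization": {"bits": 4},
        # Train a small LoRA adapter instead of updating all model weights.
        "adapter": {"type": "lora"},
        # Assumed dataset columns: "instruction" (input) and "output" (target).
        "input_features": [{"name": "instruction", "type": "text"}],
        "output_features": [{"name": "output", "type": "text"}],
        "trainer": {
            "type": "finetune",
            "epochs": 1,
            "batch_size": 1,
            "gradient_accumulation_steps": 16,
            "learning_rate": 0.0001,
        },
    }


def fine_tune(dataset_path: str):
    """Fine-tune on a dataset file (hypothetical path) and return the model."""
    # Imported lazily so the config can be built and inspected without Ludwig.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=build_config(), logging_level=logging.INFO)
    model.train(dataset=dataset_path)
    return model
```

Calling `fine_tune("train.csv")` would start training, assuming Ludwig is installed with its LLM dependencies and a suitable GPU is available. Keeping the config in a plain dictionary mirrors Ludwig's declarative style: the same keys can be written as a YAML file instead.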

Predibase on LinkedIn: Low-Code/No-Code: Why Declarative

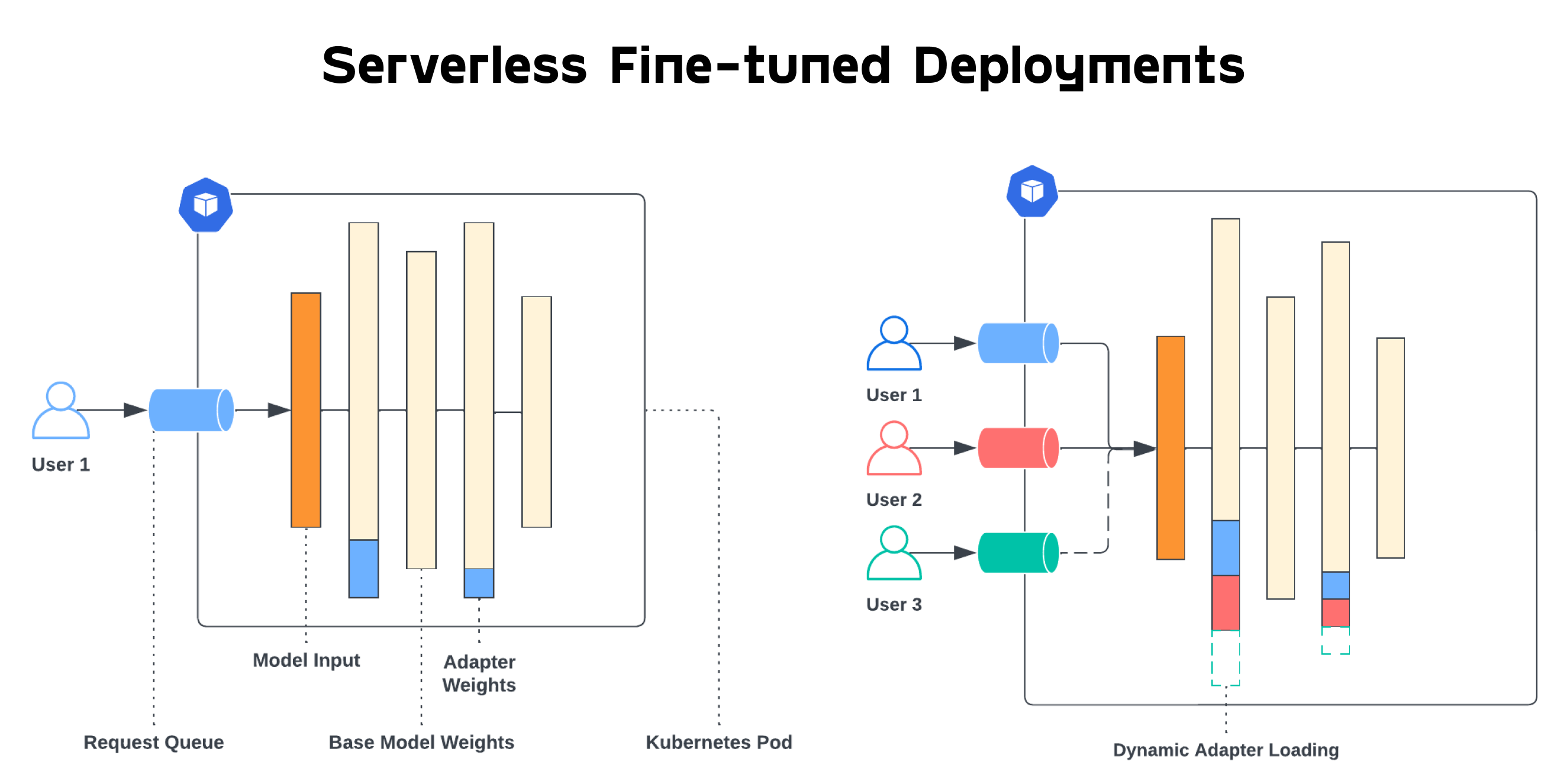

Introducing the first purely serverless solution for fine-tuned

Deep Learning – Predibase

Fine-tune Mixtral 8x7B (MoE) on Custom Data - Step by Step Guide

GitHub - predibase/llm_distillation_playbook: Best practices for

Predibase on LinkedIn: 19 top venture capitalists to know that

Ludwig (@ludwig_ai) / X

50x Faster Fine-Tuning in 10 Lines of YAML with Ludwig and Ray

Kabir Brar (@_ksbrar_) / X

Ludwig 0.5: Declarative Machine Learning, now on PyTorch
